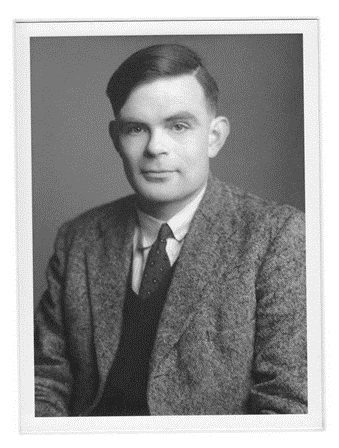

Alan Turing first proposed his test of machine intelligence in 1950. He described an “imitation game” in which a human evaluator judges a conversation between a machine and another human. If the evaluator cannot distinguish between the human and the machine, the machine passes the Turing test.

Turing was one of the founding figures of modern computing, and his work looms large in the history of NLP. In addition to the Turing test, many concepts bear his name, such as the Turing machine (a theoretical model of computation) and the Turing Award (an annual prize for contributions to computer science). Turing also led the British team that broke the Enigma cipher during World War II, which allowed the Allies to read intercepted Axis transmissions. That work was the subject of the 2014 film The Imitation Game.

The history of NLP is filled with stories of pioneers like Alan Turing. Since Turing first proposed his namesake test, generations of NLP researchers have taken up the challenge of creating NLP systems that could pass it. The field has seen numerous advances, culminating in 2022 with the release of ChatGPT, which became the first AI application to truly capture the attention of mainstream users.

NLP has seen four eras:

- The symbolic era (1950s to late 1980s)

- The statistical era (1980s to early 2000s)

- The neural network era (late 1990s to late 2010s)

- The LLM era (2018 – present)

A common misconception is that because technology rapidly advances and breakthroughs can quickly become obsolete, there is therefore no value in understanding earlier methods. As a result, many people believe that we should always focus on the latest advancements.

However, each era introduced methods that are still used today. Those methods have interesting business applications that remain relevant in many contexts.

Therefore, when discussing the history of NLP, we should focus not only on individual pioneers and their breakthroughs but also on the practical business applications that endure today because of them.

We continue our journey through the history of NLP at MIT.

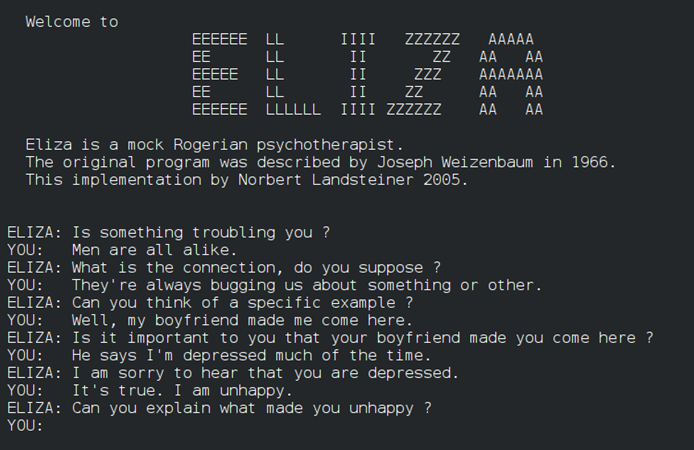

From 1964 to 1967, computer scientist and MIT professor Joseph Weizenbaum developed a natural language program which he named ELIZA. Weizenbaum took inspiration from the Rogerian school of psychotherapy, in which the therapist encourages self-reflection by rephrasing a patient’s words in the form of a question.

By manipulating user input to create the illusion of understanding and empathy, ELIZA displayed remarkably human-like responses. Weizenbaum was surprised at how quickly people became emotionally attached.
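The rephrasing trick described above can be sketched in a few lines of Python. This is a minimal illustration, not Weizenbaum's original script: the rules, the pronoun table, and the function names `reflect` and `respond` are all hypothetical and far simpler than ELIZA's actual rule set.

```python
import re

# Hypothetical pronoun table: swaps first-person words for second-person ones.
PRONOUN_SWAPS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Hypothetical rules: a pattern to look for and a question template to fill in.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment: str) -> str:
    """Lowercase the fragment and swap pronouns so it reads from the listener's side."""
    words = fragment.lower().split()
    return " ".join(PRONOUN_SWAPS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the first matching rule's question, or a neutral prompt."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I feel ignored by my boss."))
# prints: Why do you feel ignored by your boss?
```

Nothing in this sketch models meaning. It only matches and substitutes text, which is why the sense of understanding it creates is an illusion.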

Once, when his secretary started to chat with ELIZA, she asked him to leave the room. Although she had seen Weizenbaum develop the program and knew it was only a program, she still wanted to confide in ELIZA in private.

ELIZA was one of the earliest attempts at the Turing test. It was a star of the first age of NLP: the symbolic era. For the next 30 years, computer scientists improved upon ELIZA’s foundations by creating more complex language rules, more elaborate knowledge representations, and more sophisticated pattern matching and substitution.

However, rules-based approaches required explicit descriptions of every linguistic rule, which proved to be infeasible. Gradually, newer approaches would replace them, with the advent of the statistical era.

Nevertheless, the symbolic era left an important legacy in the broader IT community. For example, it paved the way for the advent of business rules engines.

Introduced in the 1990s, business rules engines allowed companies to codify the logic of a business process. The engines provided a way for business analysts, rather than professional programmers, to read and edit the business logic of IT applications.

Therefore, by applying the rules-based approaches for NLP to business processes, technologists created a novel way for businesses to manage their IT complexity.
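To make the idea concrete, here is a minimal sketch of rules kept as data rather than buried in program logic. It does not reflect any particular commercial rules engine. The discount rules, field names, and the `evaluate_order` function are hypothetical.

```python
import operator

# Comparison operators that a rule may name in plain text.
OPERATORS = {">=": operator.ge, "<=": operator.le, "==": operator.eq}

# Hypothetical business rules, stored as data so an analyst could read and edit them.
DISCOUNT_RULES = [
    {"name": "bulk order", "field": "quantity", "op": ">=", "value": 100, "discount": 0.10},
    {"name": "loyal customer", "field": "years_as_customer", "op": ">=", "value": 5, "discount": 0.05},
]


def evaluate_order(order, rules=DISCOUNT_RULES):
    """Return the total discount and the names of the rules that fired."""
    fired = [
        rule for rule in rules
        if OPERATORS[rule["op"]](order[rule["field"]], rule["value"])
    ]
    total_discount = sum(rule["discount"] for rule in fired)
    return total_discount, [rule["name"] for rule in fired]


discount, reasons = evaluate_order({"quantity": 150, "years_as_customer": 2})
print(reasons)
# prints: ['bulk order']
```

Changing a threshold or adding a rule means editing the table, not the code that evaluates it.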

In 1984, Peter Brown joined an IBM research group working on NLP. After several years, his team, which included computer scientist Robert Mercer, introduced a set of IBM alignment models for statistical machine translation.

The team used existing parallel text material to train their models, such as English-French transcripts of Canadian parliamentary debates. In contrast to the rules-based approach of the symbolic era, the IBM alignment models relied on statistical signals from the probabilities inherent in parallel text sources to infer linguistic rules.

They were more successful than the symbolic approaches that had difficulty handling ambiguity, variability, and previously unseen data.
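The way word translations can be inferred from parallel text alone can be sketched with a simplified version of the first IBM alignment model, trained by expectation-maximization. This is an illustrative toy under stated assumptions: the three-sentence corpus is invented, the NULL word of the full model is omitted, and the names `train_ibm_model1` and `best_translation` are hypothetical.

```python
from collections import defaultdict

# Toy parallel corpus (invented for illustration): English tokens, French tokens.
PARALLEL_CORPUS = [
    (["the", "house"], ["la", "maison"]),
    (["the", "flower"], ["la", "fleur"]),
    (["a", "flower"], ["une", "fleur"]),
]


def train_ibm_model1(pairs, iterations=10):
    """Estimate t(f | e), the probability that English word e pairs with French word f."""
    french_vocab = {f for _, french in pairs for f in french}
    # Start with no knowledge: every French word is equally likely for every English word.
    t = defaultdict(lambda: 1.0 / len(french_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected number of times f aligns to e
        total = defaultdict(float)  # expected number of alignments involving e
        # E-step: share each French word among the English words in its sentence.
        for english, french in pairs:
            for f in french:
                norm = sum(t[(f, e)] for e in english)
                for e in english:
                    share = t[(f, e)] / norm
                    count[(f, e)] += share
                    total[e] += share
        # M-step: re-estimate the probabilities from the expected counts.
        for (f, e), c in count.items():
            t[(f, e)] = c / total[e]
    return dict(t)


def best_translation(table, english_word):
    """Return the French word with the highest probability for an English word."""
    candidates = {f: p for (f, e), p in table.items() if e == english_word}
    return max(candidates, key=candidates.get)


table = train_ibm_model1(PARALLEL_CORPUS)
print(best_translation(table, "flower"))
# prints: fleur
```

No rule states that "flower" means "fleur". The model infers it because "fleur" is the only French word present every time "flower" appears.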

In 1993, Brown and Mercer left IBM to join the hedge fund Renaissance Technologies. They translated their statistical approach to trading signals, portfolio optimization, risk management, and market predictions.

From 1988 to 2018, Renaissance’s flagship, the Medallion fund, had the best track record on Wall Street, returning 66% annually (39% after fees). Later, Brown and Mercer would become co-CEOs of Renaissance.

While their work in statistical machine translation may not have directly applied to hedge fund trading, their statistical expertise was pivotal in Renaissance Technologies’ success.

Eventually, statistical methods for NLP were hampered by the amount of training data available and the inability to deal with contextual information. They treated words independently or in small clusters, which ignored the context and dependencies that words could have. As a result, newer methods, based on the neural network, would emerge to replace statistical methods.

Warren McCulloch and Walter Pitts first proposed the concept of a neural network in 1943. Modeling the neuron as a simple electrical circuit and taking inspiration from the human brain, they theorized that a network of neurons could provide an alternative to the traditional model of computation of their era.

As with many great ideas ahead of their time, the McCulloch-Pitts neuron would remain in the realm of theoretical computer science for decades, hampered by a lack of computing power and data.
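A simplified McCulloch-Pitts neuron can be written as a threshold test over binary inputs. The sketch below assumes unit weights and leaves out the inhibitory inputs of the original formulation. The function names are hypothetical.

```python
def mp_neuron(inputs, threshold):
    """Fire (return 1) when the number of active binary inputs reaches the threshold."""
    return 1 if sum(inputs) >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active for the neuron to fire.
    return mp_neuron([a, b], threshold=2)


def logical_or(a, b):
    # A single active input is enough for the neuron to fire.
    return mp_neuron([a, b], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, logical_and(a, b), logical_or(a, b))
```

Changing only the threshold turns the same unit into a different logic gate, which hints at how networks of such units could carry out computation.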

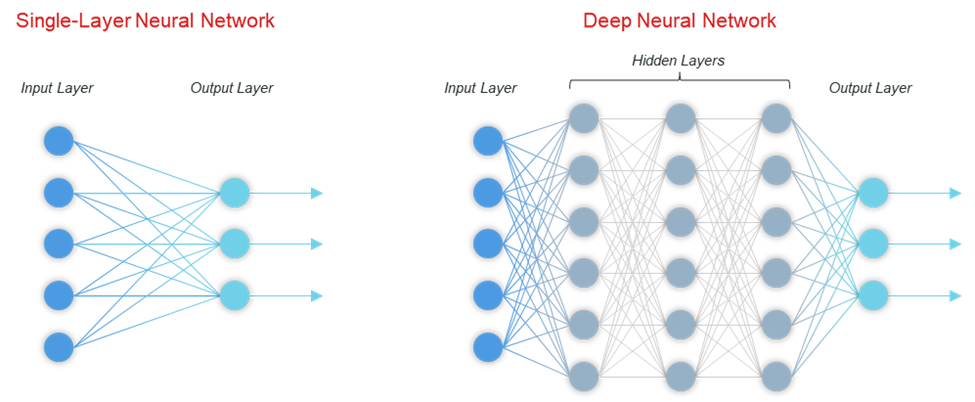

Early implementations of neural networks had a single layer. As computing power and data sets grew, researchers in the 1980s experimented with deep neural networks, containing multiple hidden layers.

The first breakthrough occurred in 1989. Yann LeCun led a team of researchers to train a deep neural network to recognize handwritten ZIP codes on mailing envelopes. Their research formed the basis of the convolutional neural network (CNN), in which each hidden layer performs a “convolution” of the previous layer, to extract high-level features of an image.

The last output layer identifies the image (e.g., a single digit from 0 to 9). The CNN is now the basis for modern image recognition algorithms.
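The "convolution" step can be illustrated by sliding a small filter over an image and summing the element-wise products at each position. This is a minimal pure-Python sketch, not LeCun's network: the tiny image, the edge-detecting filter, and the `convolve2d` function are illustrative assumptions. In a real CNN, the filter values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the element-wise products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A 3x4 image that is dark (0) on the left and bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A hand-written filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

print(convolve2d(image, vertical_edge))
# prints: [[0, 2, 0], [0, 2, 0]]
```

The output is nonzero only in the column where the brightness changes, so the filter has extracted a feature (an edge) from raw pixels.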

At the same time, another type of deep neural network gained traction for time-series data and natural language sentences. Called recurrent neural networks (RNNs), these models process a sequence by allowing earlier input to “recur,” so that it can influence later outputs. RNNs addressed many of the limitations of the NLP models of the statistical era and established a new standard in NLP.

Today, RNNs still form the basis for NLP approaches such as speech recognition. However, they are slow and expensive to train, and they struggle with relationships between words that are far apart in a sentence.
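The recurrence can be sketched with a single hidden value that is carried from one step to the next. This is a toy, scalar version under stated assumptions: real RNNs use weight matrices learned from data, whereas the weights here are fixed by hand, and `rnn_forward` is a hypothetical name.

```python
import math


def rnn_forward(inputs, w_input, w_hidden, bias=0.0):
    """Run a one-unit recurrent network over a sequence and return every hidden state."""
    hidden = 0.0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states


# Only the first input is nonzero, yet later states still carry a trace of it.
states = rnn_forward([1.0, 0.0, 0.0], w_input=1.0, w_hidden=1.0)
print([round(s, 3) for s in states])
```

Because each step must wait for the previous one, the computation is hard to parallelize, and the trace of an early input fades a little at every step.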

In 2018, Yoshua Bengio, Geoffrey Hinton, and Yann LeCun won the ACM Turing Award for their research on deep neural networks. Considered the “Nobel Prize of computer science,” the award takes its name from Alan Turing, who proposed his Turing test for machine intelligence decades earlier.

Bengio, Hinton, and LeCun laid many of the foundations for generative AI and large language models (LLMs).

Building on those foundations, OpenAI released ChatGPT on November 30, 2022. In just five days, ChatGPT acquired 1 million users, becoming the fastest-ever application to reach that milestone. ChatGPT was the first AI application that truly captured the attention of mainstream users.

ChatGPT had three improvements over earlier deep neural networks:

- The transformer architecture;

- Generative pretraining; and

- Reinforcement learning from human feedback (RLHF)

First, RNNs were slow and expensive to train and run. In 2017, researchers at Google proposed a new deep-learning architecture known as the transformer. Whereas RNNs relied on a memory mechanism, the transformer introduced a new attention mechanism.

Transformers proved both faster and more accurate. The attention-based transformer now serves as the main architecture for LLMs.

Second, in 2018, researchers at OpenAI proposed a revolutionary approach to NLP called generative pretraining. Earlier approaches to training LLMs required large amounts of manually labeled data, which restricted their applicability. In contrast, generative pretraining is a two-step process: researchers first train an LLM on unlabeled data and then fine-tune the LLM on a smaller body of labeled data specific to a particular task.
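For readers curious about the attention mechanism mentioned above, the following is a minimal pure-Python sketch of scaled dot-product attention, the core operation of the transformer. It is a simplification: real transformers add learned projections and multiple attention heads and run on specialized hardware. The vectors and the function names are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """For each query, return a weighted average of the values.

    The weights reflect how well the query matches each key.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# One query that matches the first key far better than the second.
result = attention(
    queries=[[5.0, 0.0]],
    keys=[[10.0, 0.0], [-10.0, 0.0]],
    values=[[1.0], [0.0]],
)
print(result[0][0] > 0.99)
# prints: True
```

Every query is compared with every key directly, so distance within the sentence does not matter, and the comparisons can run in parallel rather than one step at a time.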

Third, OpenAI’s initial versions of GPT were foundation models: LLMs that could be fine-tuned to specific tasks. In 2022, OpenAI fine-tuned two LLMs through reinforcement learning from human feedback (RLHF):

- InstructGPT, which could follow instructions, and

- ChatGPT, which became an overnight sensation

RLHF offered better alignment with human preferences, higher accuracy, and less toxicity, allowing the LLMs to sound more natural.

From Turing to ChatGPT, we can divide the history of NLP into four eras. Each era evolved to address the shortcomings of the one before it. The later techniques are more powerful, but they require more computing power and data.

However, not all business problems require a cutting-edge AI solution. Sometimes, a simpler solution better addresses a specific business challenge. Selecting the right AI solution requires a combination of business art and science.

Many AI practitioners believe that because technology rapidly advances and breakthroughs can quickly become obsolete, we should only focus on the latest advancements. That is a mistake. On the contrary, understanding the history of NLP allows us to better understand the breadth of business applications of AI.

The best way to learn history is through storytelling. Stories capture our imagination. They involve people, striving for greatness, applying their ingenuity to advance the boundaries of human knowledge. We’ve only covered a small sample of stories. There are hundreds more.

It is important not to learn the history of AI merely for its own sake. In this article, we focused on the business applications of AI.

Business leaders can advance their AI journey with three practical steps:

1. Identify an initial list of relevant business applications.

| Era | Initial Application | Representative Business Applications |
| --- | --- | --- |
| Symbolic Era | ELIZA | Business rules engine |
| Statistical Era | IBM alignment models | Hedge fund trading |
| Neural Network Era | McCulloch-Pitts neuron | Computer vision; Speech to text |
| LLM Era | Generative pre-trained transformer | ChatGPT |

2. Remember that digital begins with people.

This article focused on the key people in each era. The technology came afterward.

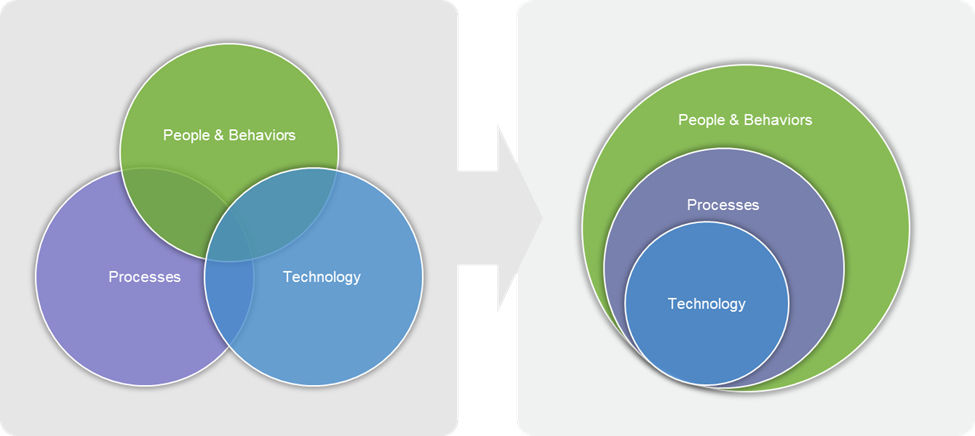

Transformations involve people, processes, and then technology. First focus on identifying the people who will lead the way. They should have both a foundational knowledge of how AI can address business challenges and a solid understanding of specific opportunities to improve your business operations.

Let those leaders develop new ways of working. Then implement technology at the core of those processes.

It’s cliché, but worth repeating: Digital transformation starts with people, and then processes, and then technology.

3. Don't start alone. Seek expert advice.

Even businesses that have the right AI leaders and sufficient organizational momentum struggle to get started. As a result, most firms seek expert advice from experienced AI practitioners to accelerate their AI journey.

For instance, an independent consultant or specialized boutique consultancy such as Coda Strategy can help with defining AI strategy, developing use cases, estimating return on investment, and building a roadmap. Then, an implementation firm or large systems integration firm can help execute that roadmap alongside your team.

As with any consulting firm, it’s important to identify the right mix of thought leadership, case studies with similar clients, and cultural fit for your organization, to propel you to AI success.
